The Fundamentals of Quantum Computing

Quantum computing applies the principles of quantum mechanics to computation, and it works very differently from classical computing. At the core of quantum computers are qubits, or quantum bits. Unlike a classical bit, which is always either 0 or 1, a qubit can exist in a superposition of both states until it is measured. Qubits can also be entangled with each other, meaning their measurement outcomes remain correlated no matter the distance between them. Together, these properties allow quantum systems to perform certain calculations much faster than the best known classical methods, in some cases exponentially faster.
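As a rough illustration of superposition and entanglement, the sketch below simulates two qubits on a classical machine using plain Python lists. The helper names (`apply_single_qubit_gate`, `apply_cnot`) are hypothetical and written only for this example; real work would normally use a dedicated quantum SDK. It assumes qubit 0 is the leftmost bit in each label.

```python
import math

def apply_single_qubit_gate(state, gate, target, n_qubits):
    """Apply a 2x2 gate to one qubit of an n-qubit state vector."""
    shift = n_qubits - 1 - target  # qubit 0 is the most significant bit
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        bit = (index >> shift) & 1
        for new_bit in (0, 1):
            # Same basis state, but with the target bit set to new_bit
            new_index = index ^ ((bit ^ new_bit) << shift)
            new_state[new_index] += gate[new_bit][bit] * amplitude
    return new_state

def apply_cnot(state, control, target, n_qubits):
    """Flip the target qubit wherever the control qubit is 1."""
    control_shift = n_qubits - 1 - control
    target_shift = n_qubits - 1 - target
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        control_bit = (index >> control_shift) & 1
        new_state[index ^ (control_bit << target_shift)] += amplitude
    return new_state

# Hadamard gate: turns |0> into an equal superposition of |0> and |1>
s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]

state = [1 + 0j, 0j, 0j, 0j]  # both qubits start in |00>
state = apply_single_qubit_gate(state, H, target=0, n_qubits=2)  # superposition
state = apply_cnot(state, control=0, target=1, n_qubits=2)       # entanglement

probabilities = {format(i, "02b"): round(abs(a) ** 2, 3) for i, a in enumerate(state)}
print(probabilities)  # {'00': 0.5, '01': 0.0, '10': 0.0, '11': 0.5}
```

The result is a Bell state: the two qubits are only ever measured as 00 or 11, each half the time, so learning one qubit's outcome fixes the other's. Note that the state vector doubles in length with every added qubit, which is why this kind of classical simulation stops scaling quickly.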

Another defining characteristic is quantum interference, which allows quantum algorithms to amplify the probability of correct outcomes while cancelling out incorrect ones. Together, these phenomena form the foundation of quantum algorithms, such as Shor’s algorithm for factoring large numbers and Grover’s algorithm, which offers a quadratic speedup for searching unsorted databases. Understanding these principles is essential to grasping both the potential and the limitations of quantum computing.
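To make the idea of interference concrete, here is a minimal classical simulation of the amplitude bookkeeping in Grover’s algorithm. It is a sketch under simplifying assumptions: the search space has 8 items, exactly one is marked, and the function name `grover_probabilities` is made up for this example. It tracks amplitudes directly rather than running on quantum hardware, so it shows how the algorithm behaves, not its speed.

```python
import math

def grover_probabilities(n_items, marked, iterations):
    """Return measurement probabilities after the given number of Grover iterations."""
    # Start in an equal superposition over all items
    amplitudes = [1 / math.sqrt(n_items)] * n_items
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude
        amplitudes[marked] = -amplitudes[marked]
        # Diffusion: reflect every amplitude about the mean, which
        # boosts the marked item and shrinks all the others
        mean = sum(amplitudes) / n_items
        amplitudes = [2 * mean - a for a in amplitudes]
    return [a * a for a in amplitudes]

n_items = 8
marked = 5
# Roughly (pi / 4) * sqrt(N) iterations maximizes the success probability
iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
probs = grover_probabilities(n_items, marked, iterations)

print(iterations)               # 2
print(round(probs[marked], 3))  # 0.945
```

After only two iterations the marked item is found with about 94.5% probability, compared with a 12.5% chance from a single random guess. Running more iterations than the optimum makes the probability fall again, which is one reason the iteration count has to be chosen carefully.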

Key Milestones and Technological Progress

Quantum computing has evolved from theoretical physics to functioning prototypes over the past several decades. The first significant milestone came in the 1980s, when physicists like Richard Feynman proposed that computers built on quantum principles could simulate physical processes more efficiently than classical machines. This was followed in the 1990s by the development of quantum algorithms that demonstrated potential computational advantages.

In the 21st century, companies like IBM, Google, and Rigetti have built quantum processors with dozens, and more recently hundreds, of qubits. In 2019, Google claimed “quantum supremacy” after reporting that its quantum processor had solved a specific problem faster than any known classical supercomputer could. Although the problem had limited practical use and the size of the speedup was disputed, the achievement symbolized a major leap forward in the field and intensified global interest and investment in quantum technologies.

Real-World Applications: Present and Future

While general-purpose quantum computers are still in development, niche applications are already emerging. In pharmaceuticals, quantum simulations can potentially model molecular structures and interactions far more efficiently than classical computers. This could lead to breakthroughs in drug discovery and materials science.

In finance and logistics, quantum algorithms show promise for optimizing complex systems, with uses such as risk analysis and delivery routing. As quantum hardware improves, fields like cryptography, artificial intelligence, and energy may also benefit from quantum-enhanced algorithms. However, most applications remain theoretical until more scalable and fault-tolerant quantum machines are built.

Challenges and Limitations of Quantum Hardware

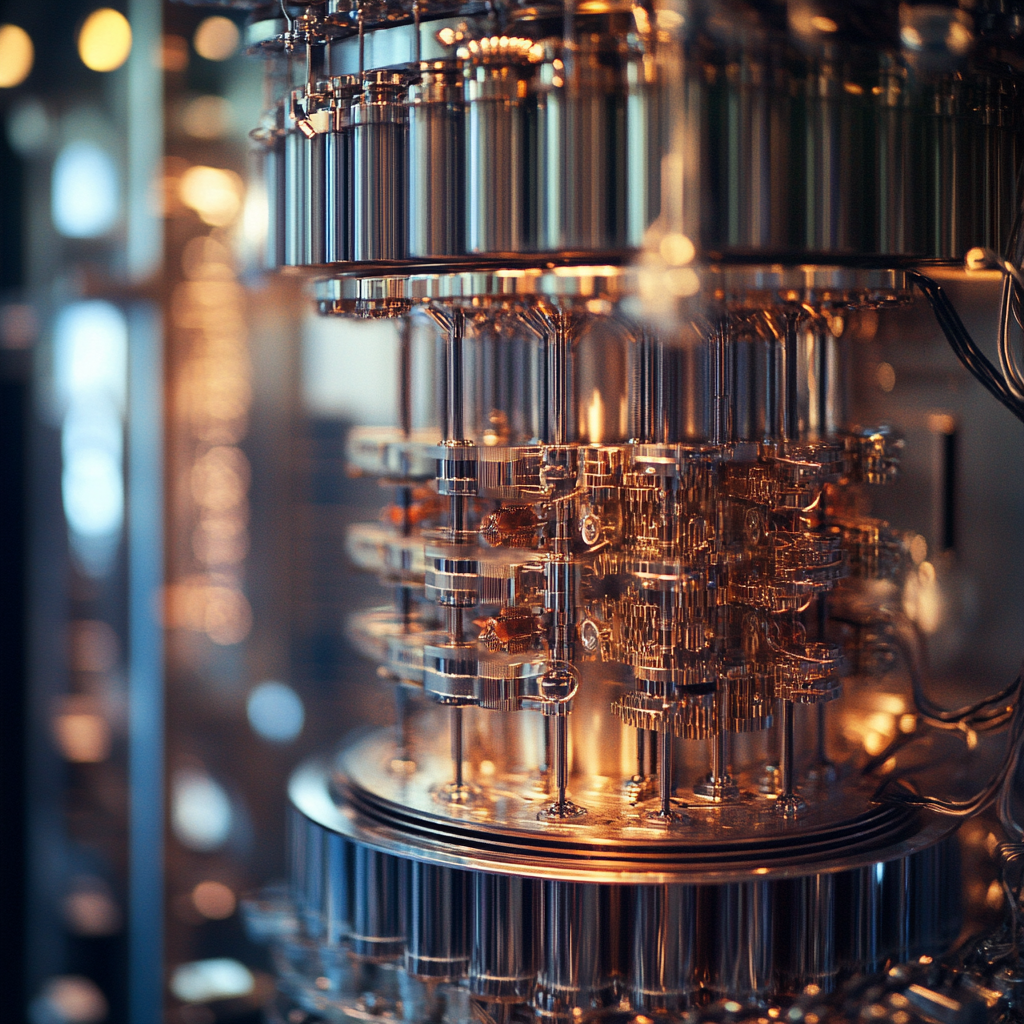

Quantum computers face significant technological challenges, primarily related to qubit stability and error correction. Qubits are extremely sensitive to environmental interference, which causes them to lose their quantum state, a phenomenon known as decoherence. Maintaining coherence long enough to perform calculations is one of the biggest hurdles in building practical quantum devices.

Furthermore, quantum error correction is far more complex than its classical counterpart, often requiring many physical qubits to create one reliable logical qubit. Building systems with thousands or millions of qubits while keeping error rates low is a monumental task. These issues highlight that while the potential is vast, the road to usable quantum computers is steep and uncertain.
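The trade-off between physical and logical qubits can be illustrated with a simple classical analogy: a repetition code decoded by majority vote. This is only a sketch. It assumes each copy fails independently with the same probability, and it ignores what makes the quantum case harder (qubits cannot simply be copied, and phase errors must be corrected as well as bit flips). The function name `logical_error_rate` is hypothetical.

```python
from itertools import product

def logical_error_rate(physical_error_rate, n_copies):
    """Probability that a majority of n_copies independent bits are flipped."""
    p = physical_error_rate
    total = 0.0
    # Enumerate every flip pattern and sum those a majority vote gets wrong
    for flips in product((0, 1), repeat=n_copies):
        n_flipped = sum(flips)
        if n_flipped > n_copies // 2:
            total += (p ** n_flipped) * ((1 - p) ** (n_copies - n_flipped))
    return total

# With a 1% physical error rate, adding copies suppresses logical errors
for n_copies in (1, 3, 5, 7):
    print(n_copies, logical_error_rate(0.01, n_copies))

# With an error rate above 50%, adding copies makes things worse
print(logical_error_rate(0.6, 3))
```

With a 1% physical error rate, three copies bring the logical error rate down to roughly 0.03%, and each further pair of copies lowers it again. The last line shows the other side: redundancy only helps when the underlying error rate is low enough to begin with, which is why lowering physical error rates matters as much as adding qubits.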

The Hype Factor: Media vs. Reality

Quantum computing is frequently portrayed in the media as a near-magical technology poised to change the world overnight. Sensational headlines often ignore the nuanced reality of slow progress, technical bottlenecks, and the limited nature of current quantum devices. This disconnect can lead to inflated expectations among investors, businesses, and the public.

While genuine breakthroughs have occurred, they are often incremental and highly specific. The reality is that we are likely many years away from quantum computers that outperform classical machines across a wide range of tasks. The current hype cycle risks undermining the credibility of the field if promises continue to outpace practical results.

Expert Perspectives and Industry Outlook

The future of quantum computing depends largely on sustained collaboration between academia, industry, and government institutions. Experts agree that while we’re still in the early stages, foundational work today is critical to enabling breakthroughs tomorrow. Most see quantum computing not as an immediate revolution, but as a long-term technological shift.

Key viewpoints include:

- Some researchers emphasize incremental development, focusing on near-term benefits such as quantum-inspired algorithms and hybrid classical-quantum systems.

- Industry leaders invest heavily in building scalable quantum hardware and infrastructure, with tech giants like IBM setting roadmaps for progress through the 2030s.

- Academic institutions continue to lead theoretical advances, contributing new models, error correction techniques, and hardware concepts.

- Governments across the world are establishing national quantum initiatives, offering funding, regulation, and collaboration platforms.

- Skeptics urge caution, stressing the importance of managing expectations and funding basic research without prematurely commercializing the technology.

Overall, quantum computing holds transformative potential — but realizing it will require time, realism, and persistent innovation.

Questions and Answers

Answer 1: Quantum computing uses qubits that leverage superposition and entanglement to perform certain calculations far more efficiently than classical bits can.

Answer 2: Google claimed quantum supremacy in 2019 by solving a problem faster than any known classical supercomputer.

Answer 3: Applications include drug discovery, financial modeling, logistics optimization, and advanced material simulations.

Answer 4: Qubits are unstable and error-prone, requiring complex error correction and extremely controlled environments.

Answer 5: Media often exaggerates capabilities, while real progress is slow and highly technical with limited current utility.